Development of Efficient Deep Learning Models for Medical Image Deepfake Detection

Faculty: Phaneendra K. Yalavarthy (CDS) and Ambedkar Dukkipati (CSA)

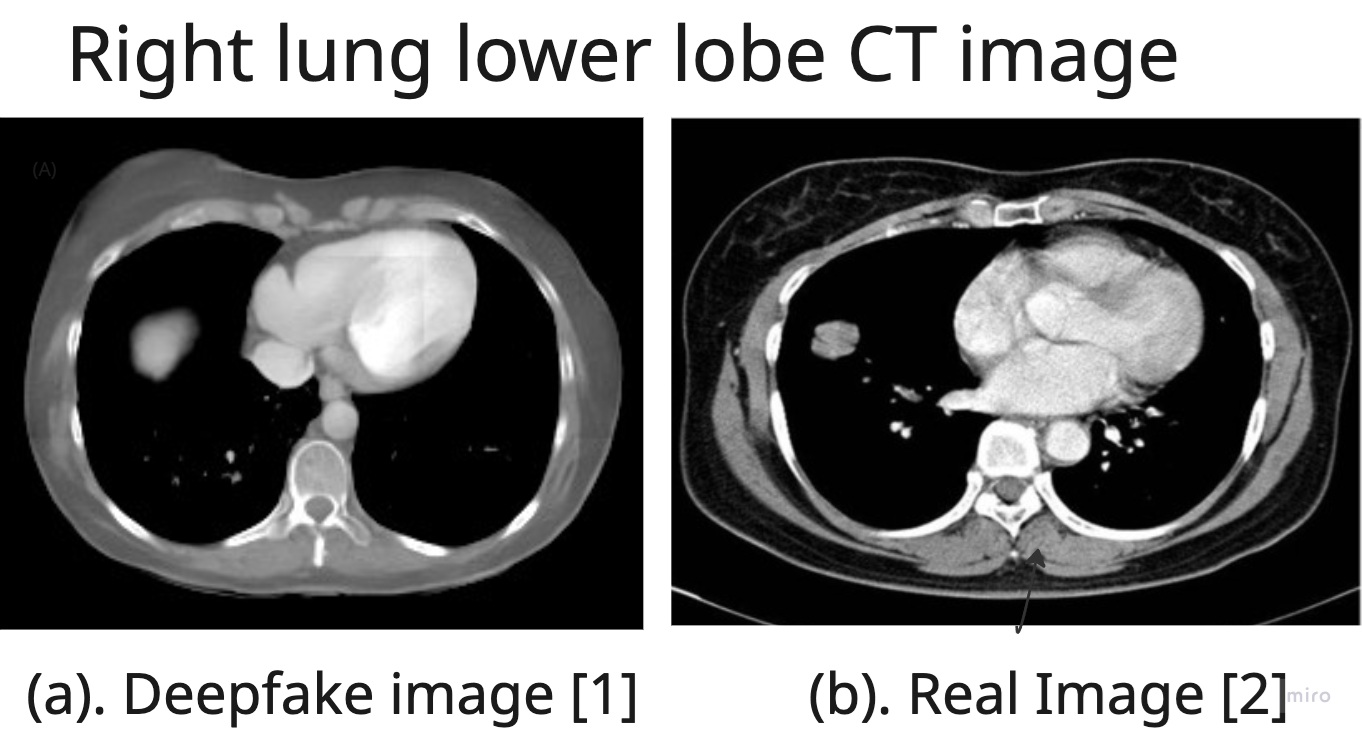

The rise of deepfake technology poses a significant threat to the integrity of medical imaging. Deepfakes in medical imaging could be used maliciously to alter diagnostic images, fabricate conditions, or compromise clinical research. Detecting such manipulations is critical to ensuring the reliability and trustworthiness of medical data. Traditional detection methods often struggle with generalization, especially when faced with diverse imaging protocols, compression artifacts, and transformations applied during image storage or transmission.

This project aims to develop mathematically robust and computationally efficient deep learning models for detecting deepfakes in medical imaging. The focus will be on leveraging advanced statistical and mathematical techniques to identify subtle inconsistencies introduced by generative processes while addressing challenges such as limited datasets, variability in imaging protocols, and real-time applicability.

Potential Research Directions:

- Attention Mechanisms: Incorporate attention mechanisms to focus on critical anatomical regions of the medical images that may reveal deepfake artifacts.

- Frequency Domain Analysis:

  - Use the Discrete Cosine Transform (DCT) and the Fourier transform to detect frequency-domain anomalies that are characteristic of deepfake manipulations.

  - Develop algorithms that exploit GAN-specific “fingerprints” left in the frequency spectrum, even under transformations such as compression or resizing.

- Expectation-Maximization (EM) Algorithm:

  - Apply EM-based feature extraction to detect convolutional traces left by generative models.

  - Use probabilistic models to estimate latent variables that differentiate real from fake images.

- Contrastive Learning:

  - Implement contrastive loss functions to learn robust representations by comparing real and fake image pairs.

  - Use self-supervised contrastive learning to improve model performance on small datasets.

- Spatio-Temporal Inconsistency Detection: For dynamic imaging modalities (e.g., 4D CT or ultrasound), employ spatio-temporal models to identify inconsistencies across frames using techniques such as Gated Recurrent Units (GRUs) or Transformers.
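As a starting point for the attention-mechanisms direction, one simple option is attention-based pooling over image patches in the style of attention multiple-instance learning (Ilse et al., 2018). Each patch receives a learned score, the scores are normalised with a softmax, and the image representation is the weighted sum of patch features, so the weights double as a map over anatomical regions. This is a minimal sketch, assuming raw pixel patches as features and random, untrained parameters `V` and `w`; the helper names `patchify` and `attention_pool` are hypothetical.

```python
import numpy as np

# Sketch only: helper names, raw-pixel features and random weights are assumptions.


def patchify(img: np.ndarray, p: int) -> np.ndarray:
    """Split a 2D image into non-overlapping p x p patches, one flattened patch per row."""
    h, w = img.shape
    img = img[: h - h % p, : w - w % p]  # drop incomplete border patches
    return (img.reshape(h // p, p, w // p, p)
               .transpose(0, 2, 1, 3)    # (patch_row, patch_col, y, x)
               .reshape(-1, p * p))


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()


def attention_pool(feats: np.ndarray, V: np.ndarray, w: np.ndarray):
    """Attention-MIL pooling: a_k = softmax_k(w^T tanh(V f_k)), z = sum_k a_k f_k."""
    scores = np.tanh(feats @ V.T) @ w  # one scalar score per patch
    alpha = softmax(scores)            # non-negative weights summing to 1
    return alpha @ feats, alpha


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    slice_ = rng.normal(size=(64, 64))          # stand-in for a 2D medical image slice
    feats = patchify(slice_, 16)                # 16 patches of 256 pixels each
    V = rng.normal(scale=0.05, size=(32, 256))  # hypothetical hidden size of 32
    w = rng.normal(size=32)
    z, alpha = attention_pool(feats, V, w)
    print(z.shape, alpha.reshape(4, 4).round(3))  # per-patch attention map
```

In a trained detector, `V` and `w` would be learned jointly with the classifier, and the attention map could be checked against radiologist-annotated regions.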
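The frequency-domain direction can be prototyped in a few lines of NumPy. The sketch below, assuming 2D grayscale slices stored as float arrays, computes an orthonormal 2D DCT-II and an azimuthally averaged log power spectrum, a 1D spectral profile often used as a feature for spotting generator artifacts. The helper names (`dct_matrix`, `dct2`, `radial_spectrum`), the bin count and the synthetic checkerboard "artifact" are illustrative assumptions, not part of the project description.

```python
import numpy as np

# Sketch only: helper names, bin count and the synthetic artifact are assumptions.


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.cos(np.pi * (2 * i + 1) * k / (2 * n)) * np.sqrt(2.0 / n)
    m[0, :] /= np.sqrt(2.0)  # the DC row needs an extra 1/sqrt(2) to be unit-norm
    return m


def dct2(img: np.ndarray) -> np.ndarray:
    """Separable orthonormal 2D DCT-II, applied along columns and then rows."""
    h, w = img.shape
    return dct_matrix(h) @ img @ dct_matrix(w).T


def radial_spectrum(img: np.ndarray, n_bins: int = 32) -> np.ndarray:
    """Azimuthally averaged log power spectrum, ordered from low to high frequency."""
    power = np.log1p(np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2)
    h, w = img.shape
    yy, xx = np.indices((h, w))
    r = np.hypot(yy - h // 2, xx - w // 2)  # distance from the zero-frequency bin
    bins = np.minimum((r / r.max() * n_bins).astype(int), n_bins - 1)
    sums = np.bincount(bins.ravel(), weights=power.ravel(), minlength=n_bins)
    counts = np.bincount(bins.ravel(), minlength=n_bins)
    return sums / np.maximum(counts, 1)  # guard against empty bins


if __name__ == "__main__":
    yy, xx = np.indices((64, 64))
    real = np.exp(-((yy - 32) ** 2 + (xx - 32) ** 2) / (2 * 8.0 ** 2))
    # A period-2 checkerboard mimics the kind of up-sampling artifact a generator can leave.
    fake = real + 0.1 * (-1.0) ** (yy + xx)
    print(radial_spectrum(real)[-4:])
    print(radial_spectrum(fake)[-4:])
```

Because the Fourier transform is linear and the checkerboard occupies a single coefficient, only the outermost radial bin differs between the two profiles here. Real generator fingerprints are weaker and spread out, which is why robustness to compression and resizing matters.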
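One way to read the EM direction follows the idea of Popescu and Farid's resampling detector, later reused for deepfake "convolutional traces" by Guarnera et al.: model each pixel as a linear combination of its neighbours plus Gaussian noise, competing against a uniform outlier model. EM alternates between the posterior probability that each pixel obeys the linear model (E-step) and a weighted least-squares fit of the kernel (M-step); the fitted kernel can then serve as a compact feature for a downstream classifier. This is a sketch under stated assumptions: a single grayscale slice, a 3x3 neighbourhood, equal priors for the two models and a fixed iteration count. The function names are hypothetical.

```python
import numpy as np

# Sketch only: neighbourhood size, equal priors and iteration count are assumptions.


def neighbour_design(img: np.ndarray, r: int = 1):
    """Rows of X hold the (2r+1)^2 - 1 neighbours of each interior pixel; y is the centre."""
    h, w = img.shape
    offsets = [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
               if (dy, dx) != (0, 0)]
    y = img[r:h - r, r:w - r].ravel()
    X = np.stack([img[r + dy:h - r + dy, r + dx:w - r + dx].ravel()
                  for dy, dx in offsets], axis=1)
    return X, y


def em_kernel(img: np.ndarray, r: int = 1, n_iter: int = 30):
    """Estimate the inter-pixel kernel k by EM (Gaussian inliers vs uniform outliers).

    Returns k and the per-pixel inlier probabilities from the final E-step.
    """
    X, y = neighbour_design(img, r)
    k = np.zeros(X.shape[1])
    sigma = y.std() + 1e-8
    p_out = 1.0 / (y.max() - y.min() + 1e-8)  # uniform density over the intensity range
    for _ in range(n_iter):
        # E-step: posterior probability that each pixel follows the linear model
        resid = y - X @ k
        g = np.exp(-resid ** 2 / (2 * sigma ** 2)) / (sigma * np.sqrt(2 * np.pi))
        wts = g / (g + p_out)
        # M-step: weighted least squares for k (tiny ridge for stability), then sigma
        Xw = X * wts[:, None]
        k = np.linalg.solve(X.T @ Xw + 1e-8 * np.eye(X.shape[1]), Xw.T @ y)
        resid = y - X @ k
        sigma = np.sqrt(np.sum(wts * resid ** 2) / wts.sum()) + 1e-8
    return k, wts


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    yy, xx = np.indices((64, 64))
    img = np.exp(-((yy - 32) ** 2 + (xx - 32) ** 2) / (2 * 8.0 ** 2))
    img += 0.01 * rng.normal(size=img.shape)
    k, wts = em_kernel(img)
    print(k.reshape(-1).round(3), wts.mean().round(3))
```

The 8 kernel coefficients (or their statistics over many patches) would be the input features; the posterior map `wts` can also be reshaped to the interior image size to show where the linear model fails.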
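Both flavours of contrastive learning listed above come down to short loss functions. The sketch below works on precomputed embedding vectors and shows (a) a margin-based pairwise contrastive loss in the style of Hadsell et al. (2006), where a pair is labelled "same" if both images are real or both are fake, and (b) the NT-Xent loss used by SimCLR for self-supervised pre-training on two augmented views of each unlabelled image. The encoder that produces the embeddings, the margin and the temperature `tau` are assumptions; only the loss arithmetic is shown.

```python
import numpy as np

# Sketch only: embeddings are assumed to come from some encoder not shown here.


def pairwise_contrastive_loss(z1, z2, same, margin=1.0):
    """Margin loss: pull 'same' pairs together, push others at least `margin` apart."""
    d = np.linalg.norm(z1 - z2, axis=1)
    loss = same * d ** 2 + (1 - same) * np.maximum(0.0, margin - d) ** 2
    return loss.mean()


def nt_xent(za, zb, tau=0.5):
    """NT-Xent (SimCLR): za[i] and zb[i] are two augmented views of image i."""
    n = za.shape[0]
    z = np.concatenate([za, zb], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # cosine similarity via unit vectors
    sim = z @ z.T / tau
    np.fill_diagonal(sim, -np.inf)                    # exclude self-similarity
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])  # index of each positive
    row_max = sim.max(axis=1, keepdims=True)
    log_denom = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    return np.mean(log_denom - sim[np.arange(2 * n), pos])  # mean negative log-softmax


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    za = rng.normal(size=(8, 16))
    print(nt_xent(za, za), nt_xent(za, rng.normal(size=(8, 16))))
```

Matching views give a lower NT-Xent value than unrelated ones, which is the signal the encoder is trained on; the pairwise loss needs real/fake labels, whereas NT-Xent does not, which is why the latter suits small labelled datasets.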
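For dynamic modalities, a recurrent model can read per-frame features in order and accumulate evidence of temporal inconsistency. The sketch below implements a single GRU cell (update gate, reset gate and candidate state, following the Cho et al., 2014 convention) in NumPy and runs it over frame-to-frame differences of a hypothetical sequence, ending in a logistic "fake" score. The flattened-pixel features, hidden size, helper names and random untrained weights are placeholders; a real model would use learned convolutional features and trained parameters.

```python
import numpy as np

# Sketch only: features, hidden size and untrained weights are placeholders.


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_gru(d_in: int, d_h: int, rng, scale: float = 0.1):
    """Random (untrained) GRU parameters: one (W, U, b) triple per gate."""
    return {g: (rng.normal(scale=scale, size=(d_h, d_in)),
                rng.normal(scale=scale, size=(d_h, d_h)),
                np.zeros(d_h)) for g in ("z", "r", "h")}


def gru_step(x, h, p):
    Wz, Uz, bz = p["z"]
    Wr, Ur, br = p["r"]
    Wh, Uh, bh = p["h"]
    z = sigmoid(Wz @ x + Uz @ h + bz)              # update gate
    r = sigmoid(Wr @ x + Ur @ h + br)              # reset gate
    h_tilde = np.tanh(Wh @ x + Uh @ (r * h) + bh)  # candidate state
    return (1 - z) * h + z * h_tilde               # interpolate old and candidate state


def sequence_score(frames: np.ndarray, p, v: np.ndarray):
    """frames: (T, H, W). Runs the GRU over temporal differences; returns (P(fake), h)."""
    feats = frames.reshape(frames.shape[0], -1)
    diffs = np.diff(feats, axis=0)  # frame-to-frame changes expose temporal inconsistencies
    h = np.zeros(v.shape[0])
    for x in diffs:
        h = gru_step(x, h, p)
    return float(sigmoid(v @ h)), h


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.normal(size=(10, 16, 16))  # hypothetical 10-frame sequence of 16x16 slices
    params = init_gru(d_in=16 * 16, d_h=32, rng=rng)
    v = rng.normal(size=32)
    prob, h = sequence_score(frames, params, v)
    print(prob)
```

Feeding differences rather than raw frames means a perfectly static sequence leaves the hidden state at zero, so any evidence the model accumulates comes from how frames change; a Transformer encoder over the same per-frame features is the other option the project mentions.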

Background needed: Linear algebra and/or signal processing.

Basic Qualifications: B.E./B.Tech. in EE/ECE/IN/CS/IT/BME, or M.Sc. in Mathematics/Physics.
